AI Isn’t a Feature. It’s a Stress Test for Your Architecture

For as long as most of us can remember, every new family of devices has come with its own interface, its own rules, and its own set of headaches for developers.

It happened when the iPhone arrived and Mac developers rushed to port their desktop apps. It happened again with the iPad and the Apple Watch. More recently, Vision Pro joined the list.

But in my opinion, the latest source of headaches hasn’t been a new device. It’s been something else entirely: trying to build native AI experiences into our apps.

Not just adding a small chatbot, but creating AI‑first interfaces that can answer questions, reason about data, or act as agents. That’s where many existing architectures start to fall apart.

Building from the UI backwards (and why it hurts)

The root of the problem is how we’ve traditionally built software: from the interface backwards.

A large part of our business logic ends up living inside Views and ViewModels, maybe supported by a small repository layer to read and write from the database. It works… until it doesn’t.

This is why something that should be simple, like adding a widget, suddenly becomes a minor nightmare. And if you want to build an AI‑driven interface, for example a voice agent you can ask “How did I sleep last night?”, things really start to break down.

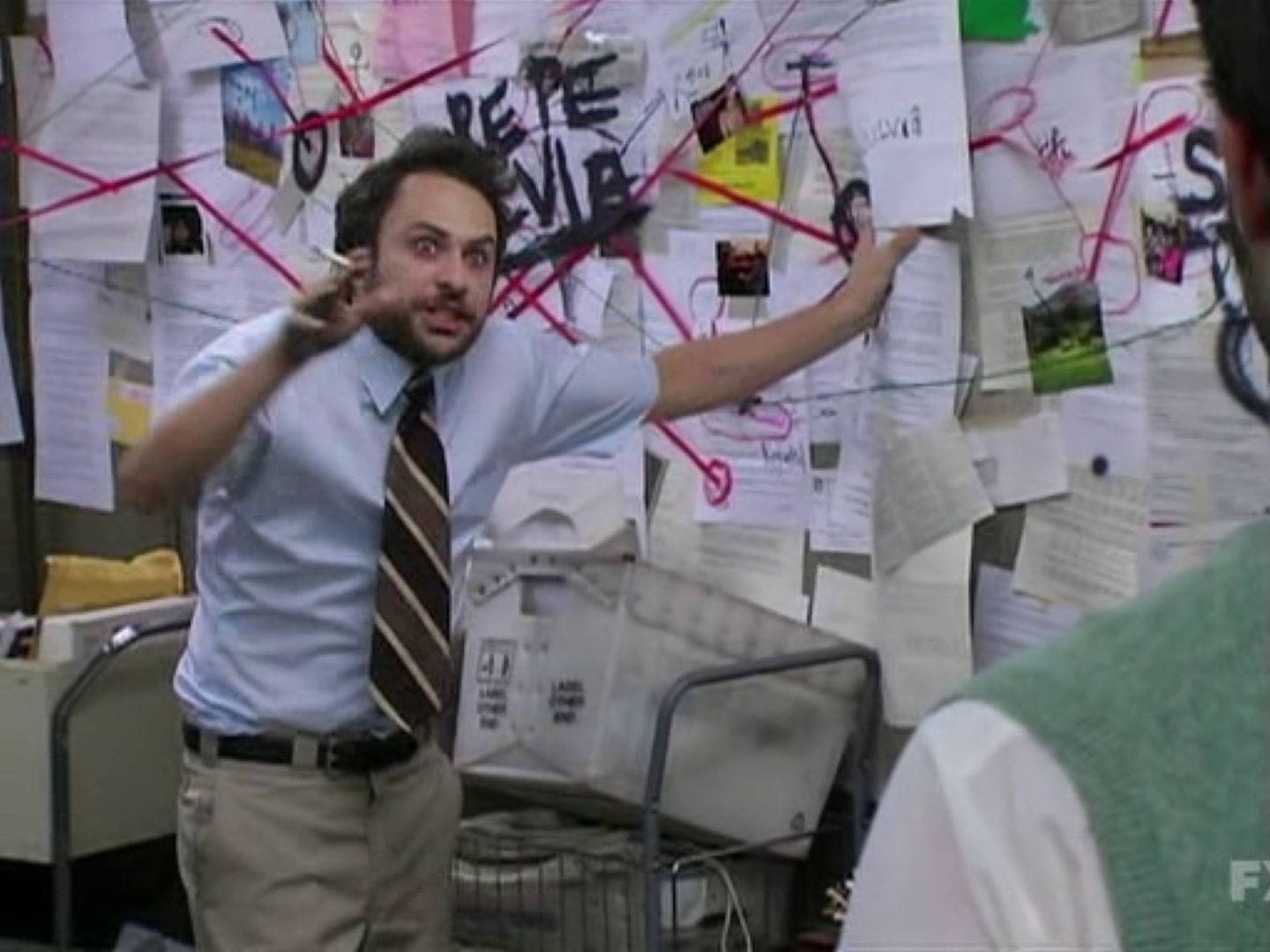

The logic is so tangled with UI concerns that you end up feeling like that famous meme: doing mental gymnastics just to get a Hello World working.

How AI coding agents changed the interface paradigm

Interestingly, the evolution of AI coding agents illustrates why decoupling the core from the interface is so important.

It began with Cursor, which forked VS Code and used the editor as the interface. The editor was central, visible, and familiar, but AI started working inside it.

Gradually, developers shifted to terminal-based tools like Claude Code and Codex, where the UI became minimal and interactions were mostly text in, text out. The interface started fading into the background.

Now, at some companies, such as Wispr, programmers primarily interact via voice, barely using a keyboard or mouse. Commands are spoken, code changes happen automatically, and the interface is no longer a tool you manipulate but an environment that responds to your core instructions.

This shows that the real value lies in the core logic, not the interface. A system that can only operate via a specific UI will break if that UI changes, but a clean, modular, interface-agnostic core can provide value anywhere.

The age of micro‑services (and micro‑apps)

This is why software architecture matters more than ever, especially in a world dominated by AI.

Tools and agents like Claude Code can write classes and functions faster than any human. But if you don’t give them a clear structure and boundaries, you’ll end up with an unmaintainable mess before you even realize what happened.

As an alternative to monolithic apps, we have architectures based on micro‑services (or micro‑apps). The idea is simple: each piece of functionality lives in its own module.

These modules contain classes and models that are:

- Independent from each other

- Independent from any interface

- Completely unaware of whether they’re being used by a screen, a widget, or a voice assistant

They only speak in terms of APIs.

Each service focuses on one very specific responsibility: logging in, analyzing sleep data, syncing workouts… you get the idea.

The result is a rock‑solid core that you can reuse across platforms, devices, and even across different apps.
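As a rough illustration of what “speaking only in terms of APIs” can look like, here is a minimal sketch of a sleep‑analysis module. Every name in it (`SleepNight`, `SleepSummary`, `SleepAnalyzing`, `SleepAnalysisService`, `widgetText`, `voiceAnswer`) is hypothetical and invented for this example, and the efficiency formula is a deliberately simple assumption, not FitWoody’s actual code. The point is only that the service imports no UI framework, while a widget and a voice agent both consume the same protocol.

```swift
import Foundation

// Hypothetical model: one night of sleep, with no UI types involved.
struct SleepNight {
    let start: Date
    let end: Date
    let awakeMinutes: Int
}

// Hypothetical result type returned by the service.
struct SleepSummary: Equatable {
    let minutesAsleep: Int
    let efficiency: Double   // fraction of time in bed spent asleep, 0...1
}

// The module's public API: the only thing callers need to know about.
protocol SleepAnalyzing {
    func summarize(_ night: SleepNight) -> SleepSummary
}

// One possible implementation (assumed formula: time in bed minus awake time).
// It depends on Foundation only, not on SwiftUI, UIKit, or WidgetKit.
struct SleepAnalysisService: SleepAnalyzing {
    func summarize(_ night: SleepNight) -> SleepSummary {
        let inBed = max(0, Int(night.end.timeIntervalSince(night.start) / 60))
        let asleep = max(0, inBed - night.awakeMinutes)
        let efficiency = inBed == 0 ? 0 : Double(asleep) / Double(inBed)
        return SleepSummary(minutesAsleep: asleep, efficiency: efficiency)
    }
}

// Two thin "interfaces" built on the same core.

// Short text suitable for a widget.
func widgetText(for night: SleepNight, using service: any SleepAnalyzing) -> String {
    let summary = service.summarize(night)
    return "\(summary.minutesAsleep / 60)h \(summary.minutesAsleep % 60)m"
}

// Full sentence suitable for a voice agent answering "How did I sleep last night?"
func voiceAnswer(for night: SleepNight, using service: any SleepAnalyzing) -> String {
    let summary = service.summarize(night)
    let percent = Int((summary.efficiency * 100).rounded())
    return "You slept \(summary.minutesAsleep / 60) hours and \(summary.minutesAsleep % 60) minutes, with \(percent) percent efficiency."
}
```

For a night of 8 hours in bed with 30 minutes awake, `widgetText` would return `7h 30m` and `voiceAnswer` would report 7 hours and 30 minutes at 94 percent efficiency. Neither function contains any analysis logic of its own, so adding a third interface means writing another thin formatter rather than touching the service.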

In recent years, Swift has taken huge steps toward becoming a true cross‑platform language. It’s no longer just for iPhones.

Today, Swift runs on microcontrollers, servers, Windows, and even Android. That means if your micro‑service architecture is written in Swift, you can share the same business logic across platforms while still building fully native interfaces. In other words: the best of both worlds.

FitWoody 2.0: laying the foundations for the future

At first glance, the biggest change in FitWoody 2.0 might seem like the new interface. In reality, the real transformation happened under the hood.

Over several months, we rewrote every part of FitWoody to adapt it to this new way of building software. The app is now structured around more than 20 independent micro‑services.

It was a massive effort, but the payoff is flexibility and speed.

Want to add widgets? We mostly just need to handle the widget lifecycle. A large part of the UI and all the business logic are already there.

Want to update the sleep analysis module? We can do it without worrying about breaking four other parts of the app. In fact, we were able to run it quietly in the background, validating that everything worked correctly before it was even visible to most users.
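One common way to validate a new module quietly is a shadow run: keep serving results from the current implementation while feeding the same input to the candidate and recording any disagreement. The sketch below assumes that approach; it is not a description of how FitWoody actually did it, and `ShadowRunner` is a hypothetical name. For simplicity it runs the candidate synchronously, whereas a real app would likely move that work off the user-facing path.

```swift
// Hypothetical helper: users only ever see the output of `current`,
// while `candidate` is exercised on the same input for comparison.
final class ShadowRunner<Input, Output: Equatable> {
    private let current: (Input) -> Output
    private let candidate: (Input) -> Output

    // Every input on which the two implementations disagreed.
    private(set) var mismatches: [(input: Input, current: Output, candidate: Output)] = []

    init(current: @escaping (Input) -> Output,
         candidate: @escaping (Input) -> Output) {
        self.current = current
        self.candidate = candidate
    }

    // Returns the current implementation's result and records any mismatch.
    func run(_ input: Input) -> Output {
        let served = current(input)
        let shadow = candidate(input)
        if shadow != served {
            mismatches.append((input: input, current: served, candidate: shadow))
        }
        return served
    }
}
```

Because the service is already isolated behind its own API, a wrapper like this can sit between the module and its callers without any screen, widget, or agent knowing it is there. Once `mismatches` stays empty (or only contains expected differences), the candidate can replace the current implementation.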

From here, the possibilities are wide open.

They range from exposing an MCP server so your favorite AI can help you get fitter to building versions of FitWoody for devices we don’t even support today.

I don’t know whether smartphones will eventually give way to augmented‑reality glasses, or whether we’ll end up living in something closer to Her. But one thing feels clear: the best way to prepare for that future is to make sure your app doesn’t depend on a single interface or a specific lifecycle.

Because there’s a good chance that, five years from now, the touchscreen in our pockets won’t be queen anymore.

P.S. If you’re still not convinced that AI has changed everything, I highly recommend listening to this podcast by Javier Cañada.
